| 2020 | ||

|---|---|---|

| Months | ||

| Jan | Feb | Mar |

| Apr | May | Jun |

| Jul | Aug | Sep |

| Oct | Nov | Dec |

| 2019 | ||

|---|---|---|

| Months | ||

| Jan | Feb | Mar |

| Apr | May | Jun |

| Jul | Aug | Sep |

| Oct | Nov | Dec |

| 2018 | ||

|---|---|---|

| Months | ||

| Jan | Feb | Mar |

| Apr | May | Jun |

| Jul | Aug | Sep |

| Oct | Nov | Dec |

| 2017 | ||

|---|---|---|

| Months | ||

| Jan | Feb | Mar |

| Apr | May | Jun |

| Jul | Aug | Sep |

| Oct | Nov | Dec |

| 2016 | ||

|---|---|---|

| Months | ||

| Jan | Feb | Mar |

| Apr | May | Jun |

| Jul | Aug | Sep |

| Oct | Nov | Dec |

| 2015 | ||

|---|---|---|

| Months | ||

| Jan | Feb | Mar |

| Apr | May | Jun |

| Jul | Aug | Sep |

| Oct | Nov | Dec |

| 2014 | ||

|---|---|---|

| Months | ||

| Jan | Feb | Mar |

| Apr | May | Jun |

| Jul | Aug | Sep |

| Oct | Nov | Dec |

| 2013 | ||

|---|---|---|

| Months | ||

| Jan | Feb | Mar |

| Apr | May | Jun |

| Jul | Aug | Sep |

| Oct | Nov | Dec |

| 2012 | ||

|---|---|---|

| Months | ||

| Jan | Feb | Mar |

| Apr | May | Jun |

| Jul | Aug | Sep |

| Oct | Nov | Dec |

What has been done

1. Verified basic ptrace(2) functionality:

- debugger cannot attach to PID0 (as root and user)

- debugger cannot attach to PID1 (as user)

- debugger cannot attach to PID1 with sercurelevel >= 1 (as root and user)

- debugger cannot attach to self

- debugger cannot attach to another process unless the process's root directory is at or below the tracing process's root

2. Verified the full matrix of combinations of wait(2) and ptrace(2) in the following test-cases

- tracee can emit PT_TRACE_ME for parent and raise SIGSTOP followed by _exit(2)

- tracee can emit PT_TRACE_ME for parent and raise SIGSTOP followed by _exit(2), with perent sending SIGINT and catching this singal only once with a signal handler and without termination of tracee

- tracee can emit PT_TRACE_ME for parent and raise SIGSTOP, with perent sending SIGINT and terminating the child without signal handler

- tracee can emit PT_TRACE_ME for parent and raise SIGSTOP, with perent sending SIGINT and terminating the child without signal handler

- tracee can emit PT_TRACE_ME for parent and raise SIGCONT, and parent reports it as process stopped

- assert that tracer sees process termination earlier than the parent

- assert that any tracer sees process termination earlier than its parent

- assert that tracer parent can PT_ATTACH to its child

- assert that tracer child can PT_ATTACH to its parent

- assert that tracer sees its parent when attached to tracer (check getppid(2))

- assert that tracer sees its parent when attached to tracer (check sysctl(7) and struct kinfo_proc2)

- assert that tracer sees its parent when attached to tracer (check /proc/curproc/status 3rd column)

- verify that empty EVENT_MASK is preserved

- verify that PTRACE_FORK in EVENT_MASK is preserved

- verify that fork(2) is intercepted by ptrace(2) with EVENT_MASK set to PTRACE_FORK

- verify that fork(2) is not intercepted by ptrace(2) with empty EVENT_MASK

- verify that vfork(2) is intercepted by ptrace(2) with EVENT_MASK set to PTRACE_VFORK [currently EVENT_VFORK not implemented]

- verify that vfork(2) is not intercepted by ptrace(2) with empty EVENT_MASK [currently failing as EVENT_VFORK not implemented]

- verify PT_IO with PIOD_READ_D and len = sizeof(uint8_t)

- verify PT_IO with PIOD_READ_D and len = sizeof(uint16_t)

- verify PT_IO with PIOD_READ_D and len = sizeof(uint32_t)

- verify PT_IO with PIOD_READ_D and len = sizeof(uint64_t)

- verify PT_IO with PIOD_WRITE_D and len = sizeof(uint8_t)

- verify PT_IO with PIOD_WRITE_D and len = sizeof(uint16_t)

- verify PT_IO with PIOD_WRITE_D and len = sizeof(uint32_t)

- verify PT_IO with PIOD_WRITE_D and len = sizeof(uint64_t)

- verify PT_READ_D called once

- verify PT_READ_D called twice

- verify PT_READ_D called three times

- verify PT_READ_D called four times

- verify PT_WRITE_D called once

- verify PT_WRITE_D called twice

- verify PT_WRITE_D called three times

- verify PT_WRITE_D called four times

- verify PT_IO with PIOD_READ_D and PIOD_WRITE_D handshake

- verify PT_IO with PIOD_WRITE_D and PIOD_READ_D handshake

- verify PT_READ_D with PT_WRITE_D handshake

- verify PT_WRITE_D with PT_READ_D handshake

- verify PT_IO with PIOD_READ_I and len = sizeof(uint8_t)

- verify PT_IO with PIOD_READ_I and len = sizeof(uint16_t)

- verify PT_IO with PIOD_READ_I and len = sizeof(uint32_t)

- verify PT_IO with PIOD_READ_I and len = sizeof(uint64_t)

- verify PT_READ_I called once

- verify PT_READ_I called twice

- verify PT_READ_I called three times

- verify PT_READ_I called four times

- verify plain PT_GETREGS call without further steps

- verify plain PT_GETREGS call and retrieve PC

- verify plain PT_GETREGS call and retrieve SP

- verify plain PT_GETREGS call and retrieve INTRV

- verify PT_GETREGS and PT_SETREGS calls without changing regs

- verify plain PT_GETFPREGS call without further steps

- verify PT_GETFPREGS and PT_SETFPREGS calls without changing regs

- verify single PT_STEP call

- verify PT_STEP called twice

- verify PT_STEP called three times

- verify PT_STEP called four times

- verify that PT_CONTINUE with SIGKILL terminates child

- verify that PT_KILL terminates child

- verify basic LWPINFO call for single thread (PT_TRACE_ME)

- verify basic LWPINFO call for single thread (PT_ATTACH from tracer)

3. Documentation of ptrace(2)

- documented PT_SET_EVENT_MASK, PT_GET_EVENT_MASK and PT_GET_PROCESS_STATE

- updated and fixed documentation of PT_DUMPCORE

- documented PT_GETXMMREGS and PT_SETXMMREGS (i386 port specific)

- documented PT_GETVECREGS and PT_SETVECREGS (ppc ports specific)

- other tweaks and cleanups in the documentation

4. exect(3) - execve(2) wrapper with tracing capabilities

Researched its usability and added ATF test, it's close to be marked for removal - it's marked as broken as it is on all ports... this call was inherited from BSD4.2 (VAX) and was never useful since the inception as it is enabling singlestepping before calling execve(2) and tracing libc calls before switching to new process image.

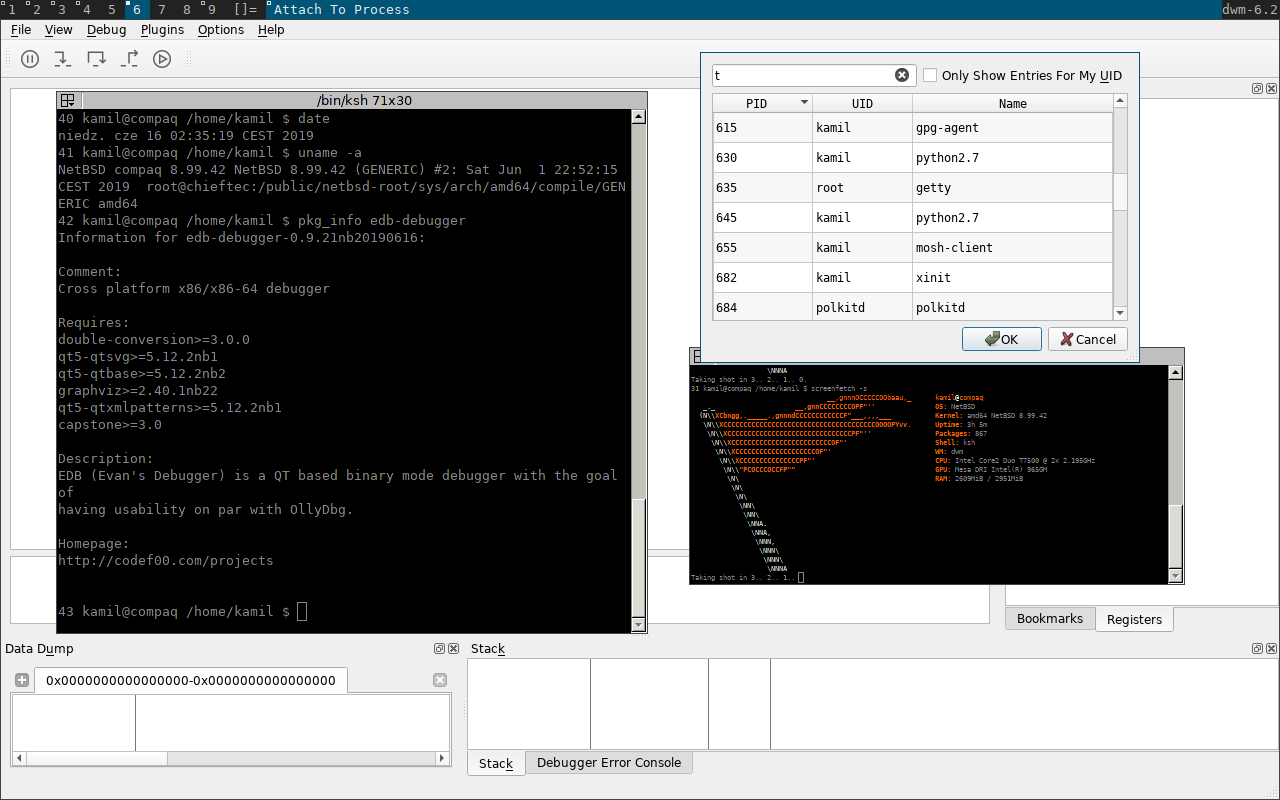

5. pthread_dbg(3) - POSIX threads debugging library documentation

- added documentation for the library in man-page

- upstreamed to the mandoc project to recognize the pthread_dbg(3) library

- document td_close(3) close connection to a threaded process

- document td_map_pth2thr(3) convert a pthread_t to a thread handle

- document td_open(3) make connection to a threaded process

- document td_thr_getname(3) get the user-assigned name of a thread

- document td_thr_info(3) get information on a thread

- document td_thr_iter(3) iterate over the threads in the process

6. pthread_dbg(3) - pthread debug library - t_dummy tests

- assert that dummy lookup functions stops td_open(3)

- assert that td_open(3) for basic proc_{read,write,lookup} works

- asserts that calling td_open(3) twice for the same process fails

7. pthread_dbg(3) - pthread debug library - test of features

- assert that td_thr_iter(3) call without extra logic works

- assert that td_thr_iter(3) call is executed for each thread once

- assert that for each td_thr_iter(3) call td_thr_info(3) is valid

- assert that for each td_thr_iter(3) call td_thr_getname(3) is valid

- assert that td_thr_getname(3) handles shorter buffer parameter and the result is properly truncated

- assert that pthread_t can be translated with td_map_pth2thr(3) to td_thread_t -- and assert earlier that td_thr_iter(3) call is valid

- assert that pthread_t can be translated with td_map_pth2thr(3) to td_thread_t -- and assert later that td_thr_iter() call is valid

- assert that pthread_t can be translated with td_map_pth2thr(3) to td_thread_t -- compare thread's name of pthread_t and td_thread_t

- assert that pthread_t can be translated with td_map_pth2thr(3) to td_thread_t -- assert that thread is in the TD_STATE_RUNNING state

8. pthread_dbg(3) - pthread debug library - code fixes

- fix pt_magic (part of pthread_t) read in td_thr_info(3)

- correct pt_magic reads in td_thr_{getname,suspend,resume}(3)

- fix pt_magic read in td_map_pth2thr(3)

- always set trailing '\0' in td_thr_getname(3) to compose valid ASCIIZ string

- kill SA thread states (TD_STATE_*) in pthread_dbg and add TD_STATE_DEAD

- obsolete thread_type in td_thread_info_st in pthread_dbg.h

This library was designed for Scheduler Activation, and this feature was removed in NetBSD 5.0.

9. wait(2) family tests

- test that wait6(2) handled stopped/continued process loop

- test whether wait(2)-family of functions return error and set ECHILD for lack of children

- test whether wait(2)-family of functions return error and set ECHILD for lack of children, with WNOHANG option verifying that error is still signaled and errno set

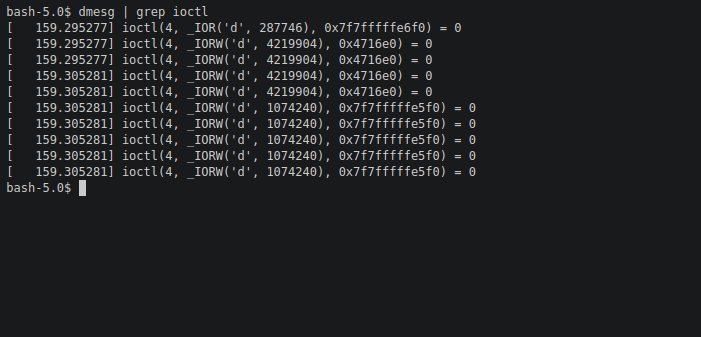

10. Debug Registers assisted watchpoints:

- fix rdr6() function on amd64

- add accessors for available x86 Debug Registers (DR0-DR3, DR6, DR7)

- switch x86 CPU Debug Register types from vaddr_t to register_t

- torn down KSTACK_CHECK_DR0, i386-only feature to detect stack overflow

12. i386 port tests

- call PT_GETREGS and iterate over General Purpose registers

13. amd64 port tests

- call PT_GETREGS and iterate over General Purpose registers

- call PT_COUNT_WATCHPOINTS and assert four available watchpoints

- call PT_COUNT_WATCHPOINTS and assert four available watchpoints, verify that we can read these watchpoints

- call PT_COUNT_WATCHPOINTS and assert four available watchpoints, verify that we can read and write unmodified these watchpoints

- call PT_COUNT_WATCHPOINTS and test code trap with watchpoint 0

- call PT_COUNT_WATCHPOINTS and test code trap with watchpoint 1

- call PT_COUNT_WATCHPOINTS and test code trap with watchpoint 2

- call PT_COUNT_WATCHPOINTS and test code trap with watchpoint 3

- call PT_COUNT_WATCHPOINTS and test write trap with watchpoint 0

- call PT_COUNT_WATCHPOINTS and test write trap with watchpoint 1

- call PT_COUNT_WATCHPOINTS and test write trap with watchpoint 2

- call PT_COUNT_WATCHPOINTS and test write trap with watchpoint 3

- call PT_COUNT_WATCHPOINTS and test write trap with watchpoint 0

- call PT_COUNT_WATCHPOINTS and test rw trap with watchpoint 0 on data read

- call PT_COUNT_WATCHPOINTS and test rw trap with watchpoint 1 on data read

- call PT_COUNT_WATCHPOINTS and test rw trap with watchpoint 2 on data read

- call PT_COUNT_WATCHPOINTS and test rw trap with watchpoint 3 on data read

14. Other minor improvements

- document in wtf(7) PCB process control block

- fix cpu_switchto(9) prototype in a comment in RICSV

15. Add support for hardware assisted watchpoints/breakpoints API in ptrace(2)

Add new ptrace(2) calls:- PT_COUNT_WATCHPOINTS - count the number of available hardware watchpoints

- PT_READ_WATCHPOINT - read struct ptrace_watchpoint from the kernel state

- PT_WRITE_WATCHPOINT - write new struct ptrace_watchpoint state, this includes enabling and disabling watchpoints

typedef struct ptrace_watchpoint {

int pw_index; /* HW Watchpoint ID (count from 0) */

lwpid_t pw_lwpid; /* LWP described */

struct mdpw pw_md; /* MD fields */

} ptrace_watchpoint_t;

For example amd64 defines MD as follows:

struct mdpw {

void *md_address;

int md_condition;

int md_length;

};

These calls are protected with the __HAVE_PTRACE_WATCHPOINTS guard.

Tested on amd64, initial support added for i386 and XEN.

16. Reported bugs:

- PR kern/51596 (ptrace(2): raising SIGCONT in a child results with WIFCONTINUED and WIFSTOPPED true in the parent)

- PR kern/51600 (Tracer must detect zombie before child's parent)

- PR standards/51603 WIFCONTINUED()=true always implies WIFSTOPPED()=true

- PR standards/51606 wait(2) with WNOHANG does not return errno ECHILD for lack of children

- PR kern/51621: PT_ATTACH from a parent is unreliable [false positive]

- PR kern/51624: Tracee process cannot see its appropriate parent when debugged by a tracer

- PR kern/51630 ptrace(2) command PT_SET_EVENT_MASK: option PTRACE_VFORK unsupported

- PR lib/51633 tests/lib/libpthread_dbg/t_dummy unreliable [false positive]

- PR lib/51635 td_thr_iter in seems broken [false positive]

- PR lib/51636: It's not possible to run under gdb(1) programs using the pthread_dbg library

- PR kern/51649: PRIxREGISTER and PTRACE_REG_* mismatch

- PR kern/51685 (ptrace(2): Signal does not set PL_EVENT_SIGNAL in (struct ptrace_lwpinfo.)pl_event)

- PR port-amd64/51700 exect(3) misdesigned and hangs

.... and several critical ones not reported and fixed directly by the NetBSD team.

Credit for Christos Zoulas and K. Robert Elz for helping with the mentioned bugs.

17. Added doc/TODO.ptrace entries

- verify ppid of core dump generated with PT_DUMPCORE it must point to the real parent, not tracer

- adapt OpenBSD regress test (regress/sys/ptrace/ptrace.c) for the ATF context

- add new ptrace(2) calls to lock (suspend) and unlock LWP within a process

- add PT_DUMPCORE tests in the ATF framework

- add ATF tests for PT_WRITE_I and PIOD_WRITE_I - test mprotect restrictions

- add ATF tests for PIOD_READ_AUXV

- add tests for the procfs interface covering all functions available on the same level as ptrace(2)

- add support for PT_STEP, PT_GETREGS, PT_SETREGS, PT_GETFPREGS, PT_SETFPREGS in all ports

- integrate all ptrace(2) features in gdb

- add ptrace(2) NetBSD support in LLDB

- add support for detecting equivalent events to PTRACE_O_TRACEEXEC, PTRACE_O_TRACECLONE, PTRACE_O_TRACEEXIT from Linux

- exect(3) rething or remove -- maybe PT_TRACE_ME + PTRACE_O_TRACEEXEC?

- refactor pthread_dbg(3) to only query private pthread_t data, otherwise it duplicates ptrace(2) interface and cannot cover all types of threads

Features in ELF, DWARF, CTF, DTrace are out of scope of the above list.

Plan for the coming weeks

My initial goal is to copy the Linux Process Plugin and add minimal functional support for NetBSD in LLDB. It will be followed with running and passing some tests from the lldb-server test-suite.

This is a shift from the original plan about porting FreeBSD Process Plugin to NetBSD, as the FreeBSD one is lacking remote debugging support and it needs to be redone from scratch.

I'm going to fork wip/lldb-git (as for git snapshot from 16 Dec 2016) for the purpose of this task to wip/lldb-netbsd and work there.

Next steps after finishing this task are to sync up with Pavel from the LLDB team after New Year. The NetBSD Process Plugin will be used as a reference to create new Common Process Plugin shared between Linux and (Net)BSD.

This work was sponsored by The NetBSD Foundation.

The NetBSD Foundation is a non-profit organization and welcomes any donations to help us continue to fund projects and services to the open-source community. Please consider visiting the following URL, and chip in what you can:

What has been done

1. Verified basic ptrace(2) functionality:

- debugger cannot attach to PID0 (as root and user)

- debugger cannot attach to PID1 (as user)

- debugger cannot attach to PID1 with sercurelevel >= 1 (as root and user)

- debugger cannot attach to self

- debugger cannot attach to another process unless the process's root directory is at or below the tracing process's root

2. Verified the full matrix of combinations of wait(2) and ptrace(2) in the following test-cases

- tracee can emit PT_TRACE_ME for parent and raise SIGSTOP followed by _exit(2)

- tracee can emit PT_TRACE_ME for parent and raise SIGSTOP followed by _exit(2), with perent sending SIGINT and catching this singal only once with a signal handler and without termination of tracee

- tracee can emit PT_TRACE_ME for parent and raise SIGSTOP, with perent sending SIGINT and terminating the child without signal handler

- tracee can emit PT_TRACE_ME for parent and raise SIGSTOP, with perent sending SIGINT and terminating the child without signal handler

- tracee can emit PT_TRACE_ME for parent and raise SIGCONT, and parent reports it as process stopped

- assert that tracer sees process termination earlier than the parent

- assert that any tracer sees process termination earlier than its parent

- assert that tracer parent can PT_ATTACH to its child

- assert that tracer child can PT_ATTACH to its parent

- assert that tracer sees its parent when attached to tracer (check getppid(2))

- assert that tracer sees its parent when attached to tracer (check sysctl(7) and struct kinfo_proc2)

- assert that tracer sees its parent when attached to tracer (check /proc/curproc/status 3rd column)

- verify that empty EVENT_MASK is preserved

- verify that PTRACE_FORK in EVENT_MASK is preserved

- verify that fork(2) is intercepted by ptrace(2) with EVENT_MASK set to PTRACE_FORK

- verify that fork(2) is not intercepted by ptrace(2) with empty EVENT_MASK

- verify that vfork(2) is intercepted by ptrace(2) with EVENT_MASK set to PTRACE_VFORK [currently EVENT_VFORK not implemented]

- verify that vfork(2) is not intercepted by ptrace(2) with empty EVENT_MASK [currently failing as EVENT_VFORK not implemented]

- verify PT_IO with PIOD_READ_D and len = sizeof(uint8_t)

- verify PT_IO with PIOD_READ_D and len = sizeof(uint16_t)

- verify PT_IO with PIOD_READ_D and len = sizeof(uint32_t)

- verify PT_IO with PIOD_READ_D and len = sizeof(uint64_t)

- verify PT_IO with PIOD_WRITE_D and len = sizeof(uint8_t)

- verify PT_IO with PIOD_WRITE_D and len = sizeof(uint16_t)

- verify PT_IO with PIOD_WRITE_D and len = sizeof(uint32_t)

- verify PT_IO with PIOD_WRITE_D and len = sizeof(uint64_t)

- verify PT_READ_D called once

- verify PT_READ_D called twice

- verify PT_READ_D called three times

- verify PT_READ_D called four times

- verify PT_WRITE_D called once

- verify PT_WRITE_D called twice

- verify PT_WRITE_D called three times

- verify PT_WRITE_D called four times

- verify PT_IO with PIOD_READ_D and PIOD_WRITE_D handshake

- verify PT_IO with PIOD_WRITE_D and PIOD_READ_D handshake

- verify PT_READ_D with PT_WRITE_D handshake

- verify PT_WRITE_D with PT_READ_D handshake

- verify PT_IO with PIOD_READ_I and len = sizeof(uint8_t)

- verify PT_IO with PIOD_READ_I and len = sizeof(uint16_t)

- verify PT_IO with PIOD_READ_I and len = sizeof(uint32_t)

- verify PT_IO with PIOD_READ_I and len = sizeof(uint64_t)

- verify PT_READ_I called once

- verify PT_READ_I called twice

- verify PT_READ_I called three times

- verify PT_READ_I called four times

- verify plain PT_GETREGS call without further steps

- verify plain PT_GETREGS call and retrieve PC

- verify plain PT_GETREGS call and retrieve SP

- verify plain PT_GETREGS call and retrieve INTRV

- verify PT_GETREGS and PT_SETREGS calls without changing regs

- verify plain PT_GETFPREGS call without further steps

- verify PT_GETFPREGS and PT_SETFPREGS calls without changing regs

- verify single PT_STEP call

- verify PT_STEP called twice

- verify PT_STEP called three times

- verify PT_STEP called four times

- verify that PT_CONTINUE with SIGKILL terminates child

- verify that PT_KILL terminates child

- verify basic LWPINFO call for single thread (PT_TRACE_ME)

- verify basic LWPINFO call for single thread (PT_ATTACH from tracer)

3. Documentation of ptrace(2)

- documented PT_SET_EVENT_MASK, PT_GET_EVENT_MASK and PT_GET_PROCESS_STATE

- updated and fixed documentation of PT_DUMPCORE

- documented PT_GETXMMREGS and PT_SETXMMREGS (i386 port specific)

- documented PT_GETVECREGS and PT_SETVECREGS (ppc ports specific)

- other tweaks and cleanups in the documentation

4. exect(3) - execve(2) wrapper with tracing capabilities

Researched its usability and added ATF test, it's close to be marked for removal - it's marked as broken as it is on all ports... this call was inherited from BSD4.2 (VAX) and was never useful since the inception as it is enabling singlestepping before calling execve(2) and tracing libc calls before switching to new process image.

5. pthread_dbg(3) - POSIX threads debugging library documentation

- added documentation for the library in man-page

- upstreamed to the mandoc project to recognize the pthread_dbg(3) library

- document td_close(3) close connection to a threaded process

- document td_map_pth2thr(3) convert a pthread_t to a thread handle

- document td_open(3) make connection to a threaded process

- document td_thr_getname(3) get the user-assigned name of a thread

- document td_thr_info(3) get information on a thread

- document td_thr_iter(3) iterate over the threads in the process

6. pthread_dbg(3) - pthread debug library - t_dummy tests

- assert that dummy lookup functions stops td_open(3)

- assert that td_open(3) for basic proc_{read,write,lookup} works

- asserts that calling td_open(3) twice for the same process fails

7. pthread_dbg(3) - pthread debug library - test of features

- assert that td_thr_iter(3) call without extra logic works

- assert that td_thr_iter(3) call is executed for each thread once

- assert that for each td_thr_iter(3) call td_thr_info(3) is valid

- assert that for each td_thr_iter(3) call td_thr_getname(3) is valid

- assert that td_thr_getname(3) handles shorter buffer parameter and the result is properly truncated

- assert that pthread_t can be translated with td_map_pth2thr(3) to td_thread_t -- and assert earlier that td_thr_iter(3) call is valid

- assert that pthread_t can be translated with td_map_pth2thr(3) to td_thread_t -- and assert later that td_thr_iter() call is valid

- assert that pthread_t can be translated with td_map_pth2thr(3) to td_thread_t -- compare thread's name of pthread_t and td_thread_t

- assert that pthread_t can be translated with td_map_pth2thr(3) to td_thread_t -- assert that thread is in the TD_STATE_RUNNING state

8. pthread_dbg(3) - pthread debug library - code fixes

- fix pt_magic (part of pthread_t) read in td_thr_info(3)

- correct pt_magic reads in td_thr_{getname,suspend,resume}(3)

- fix pt_magic read in td_map_pth2thr(3)

- always set trailing '\0' in td_thr_getname(3) to compose valid ASCIIZ string

- kill SA thread states (TD_STATE_*) in pthread_dbg and add TD_STATE_DEAD

- obsolete thread_type in td_thread_info_st in pthread_dbg.h

This library was designed for Scheduler Activation, and this feature was removed in NetBSD 5.0.

9. wait(2) family tests

- test that wait6(2) handled stopped/continued process loop

- test whether wait(2)-family of functions return error and set ECHILD for lack of children

- test whether wait(2)-family of functions return error and set ECHILD for lack of children, with WNOHANG option verifying that error is still signaled and errno set

10. Debug Registers assisted watchpoints:

- fix rdr6() function on amd64

- add accessors for available x86 Debug Registers (DR0-DR3, DR6, DR7)

- switch x86 CPU Debug Register types from vaddr_t to register_t

- torn down KSTACK_CHECK_DR0, i386-only feature to detect stack overflow

12. i386 port tests

- call PT_GETREGS and iterate over General Purpose registers

13. amd64 port tests

- call PT_GETREGS and iterate over General Purpose registers

- call PT_COUNT_WATCHPOINTS and assert four available watchpoints

- call PT_COUNT_WATCHPOINTS and assert four available watchpoints, verify that we can read these watchpoints

- call PT_COUNT_WATCHPOINTS and assert four available watchpoints, verify that we can read and write unmodified these watchpoints

- call PT_COUNT_WATCHPOINTS and test code trap with watchpoint 0

- call PT_COUNT_WATCHPOINTS and test code trap with watchpoint 1

- call PT_COUNT_WATCHPOINTS and test code trap with watchpoint 2

- call PT_COUNT_WATCHPOINTS and test code trap with watchpoint 3

- call PT_COUNT_WATCHPOINTS and test write trap with watchpoint 0

- call PT_COUNT_WATCHPOINTS and test write trap with watchpoint 1

- call PT_COUNT_WATCHPOINTS and test write trap with watchpoint 2

- call PT_COUNT_WATCHPOINTS and test write trap with watchpoint 3

- call PT_COUNT_WATCHPOINTS and test write trap with watchpoint 0

- call PT_COUNT_WATCHPOINTS and test rw trap with watchpoint 0 on data read

- call PT_COUNT_WATCHPOINTS and test rw trap with watchpoint 1 on data read

- call PT_COUNT_WATCHPOINTS and test rw trap with watchpoint 2 on data read

- call PT_COUNT_WATCHPOINTS and test rw trap with watchpoint 3 on data read

14. Other minor improvements

- document in wtf(7) PCB process control block

- fix cpu_switchto(9) prototype in a comment in RICSV

15. Add support for hardware assisted watchpoints/breakpoints API in ptrace(2)

Add new ptrace(2) calls:- PT_COUNT_WATCHPOINTS - count the number of available hardware watchpoints

- PT_READ_WATCHPOINT - read struct ptrace_watchpoint from the kernel state

- PT_WRITE_WATCHPOINT - write new struct ptrace_watchpoint state, this includes enabling and disabling watchpoints

typedef struct ptrace_watchpoint {

int pw_index; /* HW Watchpoint ID (count from 0) */

lwpid_t pw_lwpid; /* LWP described */

struct mdpw pw_md; /* MD fields */

} ptrace_watchpoint_t;

For example amd64 defines MD as follows:

struct mdpw {

void *md_address;

int md_condition;

int md_length;

};

These calls are protected with the __HAVE_PTRACE_WATCHPOINTS guard.

Tested on amd64, initial support added for i386 and XEN.

16. Reported bugs:

- PR kern/51596 (ptrace(2): raising SIGCONT in a child results with WIFCONTINUED and WIFSTOPPED true in the parent)

- PR kern/51600 (Tracer must detect zombie before child's parent)

- PR standards/51603 WIFCONTINUED()=true always implies WIFSTOPPED()=true

- PR standards/51606 wait(2) with WNOHANG does not return errno ECHILD for lack of children

- PR kern/51621: PT_ATTACH from a parent is unreliable [false positive]

- PR kern/51624: Tracee process cannot see its appropriate parent when debugged by a tracer

- PR kern/51630 ptrace(2) command PT_SET_EVENT_MASK: option PTRACE_VFORK unsupported

- PR lib/51633 tests/lib/libpthread_dbg/t_dummy unreliable [false positive]

- PR lib/51635 td_thr_iter in seems broken [false positive]

- PR lib/51636: It's not possible to run under gdb(1) programs using the pthread_dbg library

- PR kern/51649: PRIxREGISTER and PTRACE_REG_* mismatch

- PR kern/51685 (ptrace(2): Signal does not set PL_EVENT_SIGNAL in (struct ptrace_lwpinfo.)pl_event)

- PR port-amd64/51700 exect(3) misdesigned and hangs

.... and several critical ones not reported and fixed directly by the NetBSD team.

Credit for Christos Zoulas and K. Robert Elz for helping with the mentioned bugs.

17. Added doc/TODO.ptrace entries

- verify ppid of core dump generated with PT_DUMPCORE it must point to the real parent, not tracer

- adapt OpenBSD regress test (regress/sys/ptrace/ptrace.c) for the ATF context

- add new ptrace(2) calls to lock (suspend) and unlock LWP within a process

- add PT_DUMPCORE tests in the ATF framework

- add ATF tests for PT_WRITE_I and PIOD_WRITE_I - test mprotect restrictions

- add ATF tests for PIOD_READ_AUXV

- add tests for the procfs interface covering all functions available on the same level as ptrace(2)

- add support for PT_STEP, PT_GETREGS, PT_SETREGS, PT_GETFPREGS, PT_SETFPREGS in all ports

- integrate all ptrace(2) features in gdb

- add ptrace(2) NetBSD support in LLDB

- add support for detecting equivalent events to PTRACE_O_TRACEEXEC, PTRACE_O_TRACECLONE, PTRACE_O_TRACEEXIT from Linux

- exect(3) rething or remove -- maybe PT_TRACE_ME + PTRACE_O_TRACEEXEC?

- refactor pthread_dbg(3) to only query private pthread_t data, otherwise it duplicates ptrace(2) interface and cannot cover all types of threads

Features in ELF, DWARF, CTF, DTrace are out of scope of the above list.

Plan for the coming weeks

My initial goal is to copy the Linux Process Plugin and add minimal functional support for NetBSD in LLDB. It will be followed with running and passing some tests from the lldb-server test-suite.

This is a shift from the original plan about porting FreeBSD Process Plugin to NetBSD, as the FreeBSD one is lacking remote debugging support and it needs to be redone from scratch.

I'm going to fork wip/lldb-git (as for git snapshot from 16 Dec 2016) for the purpose of this task to wip/lldb-netbsd and work there.

Next steps after finishing this task are to sync up with Pavel from the LLDB team after New Year. The NetBSD Process Plugin will be used as a reference to create new Common Process Plugin shared between Linux and (Net)BSD.

This work was sponsored by The NetBSD Foundation.

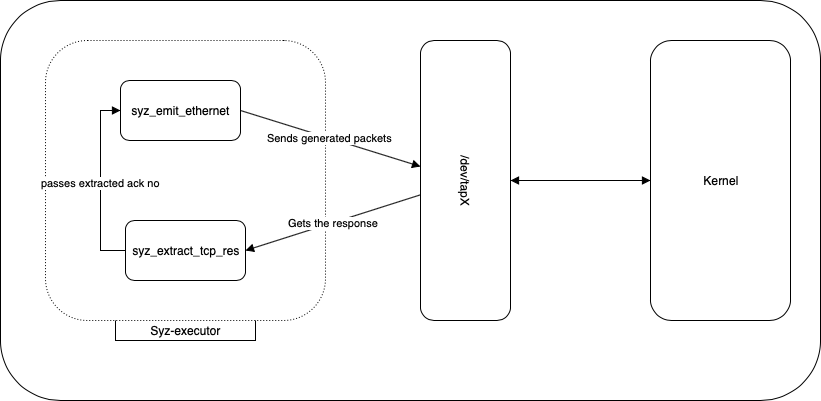

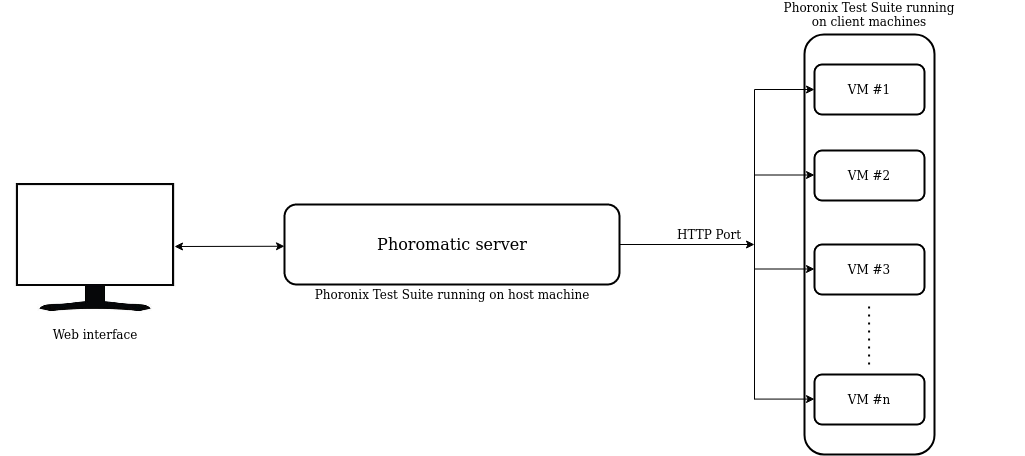

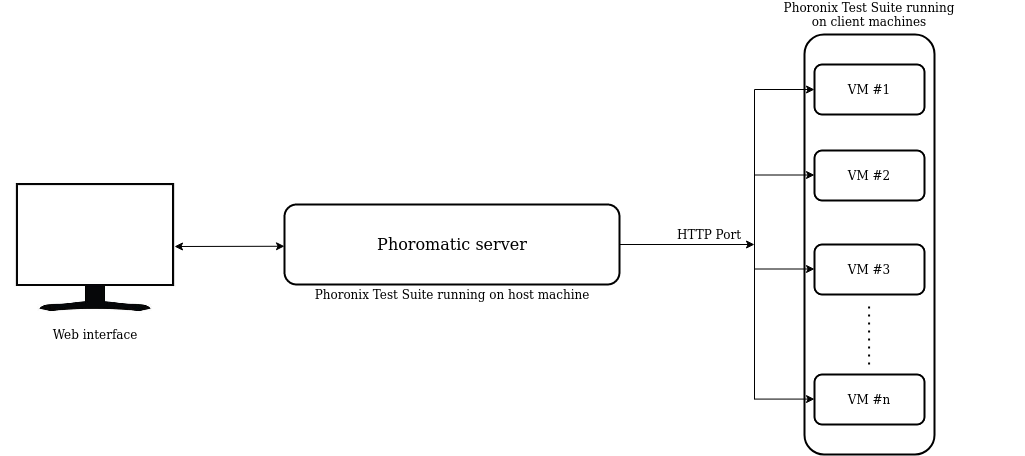

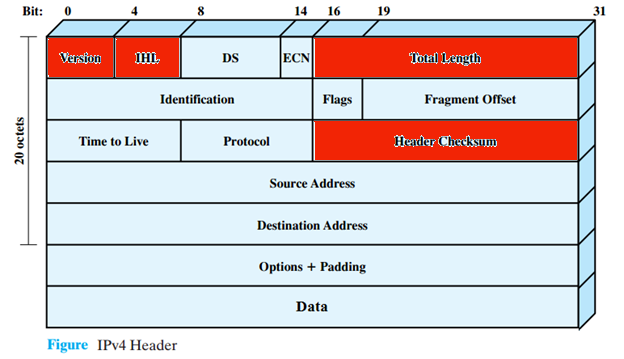

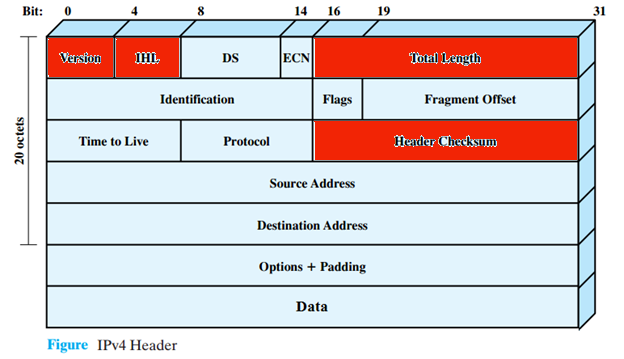

The NetBSD Foundation is a non-profit organization and welcomes any donations to help us continue to fund projects and services to the open-source community. Please consider visiting the following URL, and chip in what you can:A cyclic trend in operating systems is moving things in and out of the kernel for better performance. Currently, the pendulum is swinging in the direction of userspace being the locus of high performance. The anykernel architecture of NetBSD ensures that the same kernel drivers work in a monolithic kernel, userspace and beyond. One of those driver stacks is networking. In this article we assume that the NetBSD networking stack is run outside of the monolithic kernel in a rump kernel and survey the open source interface layer options.

There are two sub-aspects to networking. The first facet is supporting network protocols and suites such as IPv6, IPSec and MPLS. The second facet is delivering packets to and from the protocol stack, commonly referred to as the interface layer. While the first facet for rump kernels is unchanged from the networking stack running in a monolithic NetBSD kernel, there is support for a number of interfaces not available in kernel mode.

DPDK

The Data Plane Development Kit is meant to be used for high-performance, multiprocessor-aware networking. DPDK offers network access by attaching to hardware and providing a hardware-independent API for sending and receiving packets. The most common runtime environment for DPDK is Linux userspace, where a UIO userspace driver framework kernel module is used to enable access to PCI hardware. The NIC drivers themselves are provided by DPDK and run in application processes.

For high performance, DPDK uses a run-to-completion scheduling model -- the same model is used by rump kernels. This scheduling model means that NIC devices are accessed in polled mode without any interrupts on the fast path. The only interrupts that are used by DPDK are for slow-path operations such as notifications of link status change.

The rump kernel interface driver for DPDK is available here. DPDK itself is described in significant detail in the documents available from the Intel DPDK page.

netmap

Like DPDK, netmap offers user processes access to NIC hardware with a high-performance userspace packet processing intent. Unlike DPDK, netmap reuses NIC drivers from the host kernel and provides memory-mapped buffer rings for accessing the device packet queues. In other words, the device drivers still remain in the host kernel, but low-level and low-overhead access to hardware is made available to userspace processes. In addition to the memory-mapping of buffers, netmap uses other performance optimization methods such as batch processing and buffer reallocation, and can easily saturate a 10GigE with minimum-size frames. Another significant difference to DPDK is that netmap allows also for a blocking mode of operation.

Netmap is coupled with a high-performance software virtual switch called VALE. It can be used to interconnect networks between virtual machines and processes such as rump kernels. The netmap API is used also by VALE, so VALE switching can be used with the rump kernel driver for netmap.

The rump kernel interface driver for netmap is available here. Multiple papers describing netmap and VALE are available from the netmap page.

TAP

A tap device injects packets written into a device node, e.g. /dev/tap, to a tap virtual network interface. Conversely, packets received by the virtual tap network can be read from the device node. The tap network interface can be bridged with other network interfaces to provide further network access. While indirect access to network hardware via the bridge is not maximally efficient, it is not hideously slow either: a rump kernel backed by a tap device can saturate a gigabit Ethernet. The advantage of the tap device is portability, as it is widely available on Unix-type systems. Tap interfaces also virtualize nicely, and most operating systems will allow unprivileged processes to use tap interface as long as the processes have the credentials to access the respective device nodes.

The tap device was the original method for accessing with a rump kernel. In fact, the in-kernel side of the rump kernel network driver was rather short-sightedly named virt back in 2008. The virt driver and the associated hypercalls are available in the NetBSD tree. Fun fact: the tap driver is also the method for packet shovelling when running the NetBSD TCP/IP stack in the Linux kernel; the rationale is provided in a comment here and also by running wc -l.

Xen hypercalls

After a fashion, using Xen hypercalls is a variant of using the TAP device: a virtualized network resource is accessed using high-level hypercalls. However, instead of accessing the network backend from a device node, Xen hypercalls are used. The Xen driver is limited to the Xen environment and is available here.

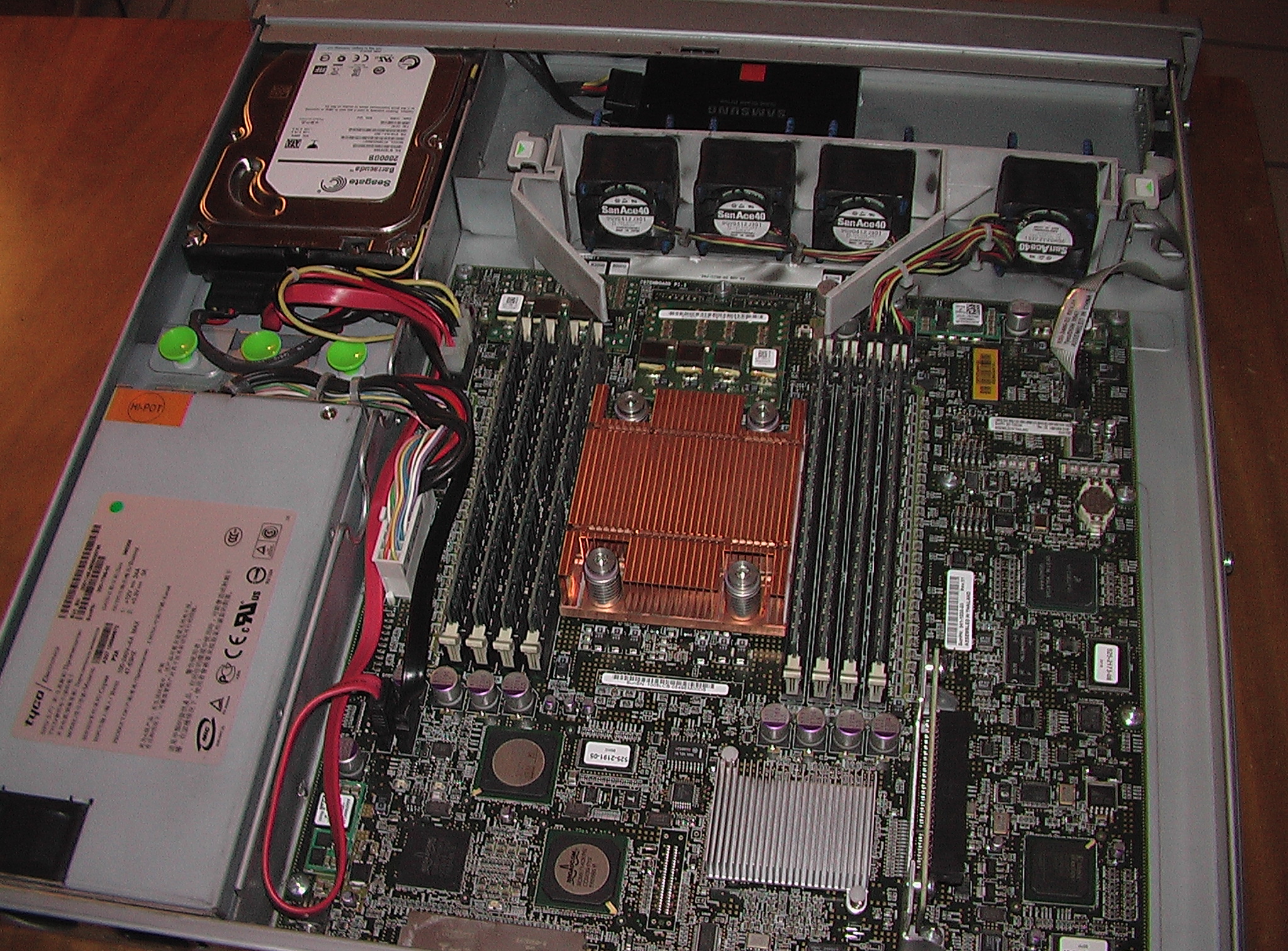

NetBSD PCI NIC drivers

The previous examples we have discussed use a high-level interface to packet I/O functions. For example, to send a packet, the rump kernel will issue a hypercall which essentially says "transmit these data", and the network backend handles the request. When using NetBSD PCI drivers, the hypercalls work at a low level, and deal with for example reading/writing the PCI configuration space and mapping the device memory space into the rump kernel. As a result, using NetBSD PCI device drivers in a rump kernel work exactly like in a regular kernel: the PCI devices are probed during rump kernel bootstrap, relevant drivers are attached, and packet shovelling works by the drivers fiddling the relevant device registers.

The hypercall interfaces and necessary kernel-side implementations are currently hosted in the repository providing Xen support for rump kernels. Strictly speaking, there is nothing specific to Xen in these bits, and they will most likely be moved out of the Xen repository once PCI device driver support for other planned platforms, such as Linux userspace, is completed. The hypercall implementations, which are Xen specific, are available here.

shmif

For testing networking, it is advantageous to have an interface which can communicate with other networking stacks on the same host without requiring elevated privileges, special kernel features or a priori setup in the form of e.g. a daemon process. These requirements are filled by shmif, which uses file-backed shared memory as a bus for Ethernet frames. Each interface attaches to a pathname, and interfaces attached to the same pathname see the the same traffic.

The shmif driver is available in the NetBSD tree.

We presented a total of six open source network backends for networking with rump kernels. These backends represent four different methodologies:

- DPDK and netmap provide high-performance network hardware access using high-level hypercalls.

- TAP and Xen hypercall drivers provide access to virtualized network resources using high-level hypercalls.

- NetBSD PCI drivers access hardware directly using register-level device access to send and receive packets.

- shmif allows for unprivileged testing of the networking stack without relying on any special kernel drivers or global resources.

Choice is a good thing here, as the optimal backend ultimately depends on the characteristics of the application.

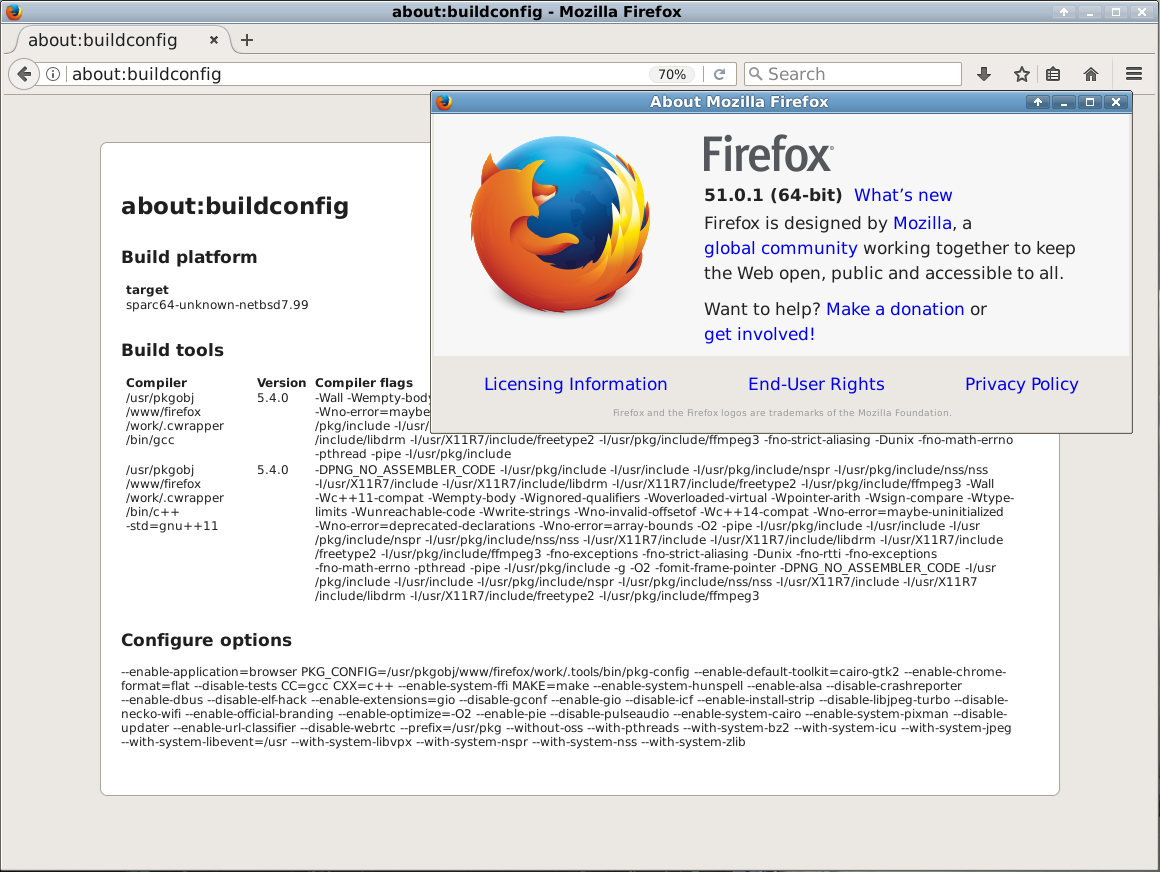

The advantages are better verifyability that the source code matches the binaries, thus addressing one of the many steps one has to check before trusting the software one runs.

We discussed various topics during the conference in small groups:

- technical aspects (how to achieve this, how to cooperate over distributions, ...)

- social aspects (how to argue for it with programmers, managers, lay people) financial aspects (how to get funding for such work)

- lots of other stuff

Making the base system reproducible: a big part of the work for this has already been done, but there a number of open issues, visible e.g. in Debian's regularly scheduled test builds, up to the fact that this is not the default yet.

Making pkgsrc reproducible: This will be a huge task, since pkgsrc targets so many and diverse platforms. On the other hand, we have a very good framework below that that should help.

For giggles, I've compared the binary packages for png built on 7.99.22 and 7.99.23 (in my chrooted pbulk only though) and found that most differences were indeed only timestamps. So there's probably a lot of low-hanging fruit in this area as well.

If you want to help, here are some ideas:

- fix the MKREPRO bugs (like PRs 48355, 48637, 48638, 50119, 50120, 50122)

- check https://reproducible.debian.net/netbsd/netbsd.html for more issues, or do your own tests

- discuss turning on MKREPRO by default

- starting working on reproducibility in pkgsrc:

- remove gzip time stamps from binary packages

- use a fixed time stamp for files inside binary packages (perhaps depending on newest file in sources, or latest change in pkgsrc files for the pkg)

- identify more of the issues, like how to get symbols ordered reproducible in binaries (look at shells/bash)

The list of supported multiprocessor boards currently is:

- Banana Pi (BPI)

- Cubieboard 2 (CUBIEBOARD)

- Cubietruck (CUBIETRUCK)

- Merrii Hummingbird A31 (HUMMINGBIRD_A31)

- CUBOX-I

- NITROGEN6X

Details how to create bootable media and various other information for the Allwinner boards can be found on the NetBSD/evbarm on Allwinner Technology SoCs wiki page.

The release engineering team is discussing how to bring all those changes into the netbsd-7 branch as well, so that we can call NetBSD 7.0 "the ARM SoC release".

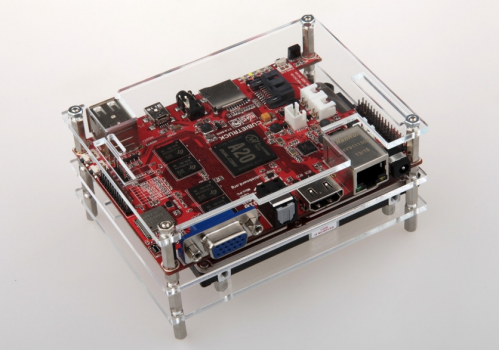

While multicore ARM chips are mostly known for being used in cell phones and tablet devices, there are also some nice "tiny PC" variants out there, like the CubieTruck, which originally comes with a small transparent case that allows piggybacking it onto a 2.5" hard disk:

Image from cubieboard.org under creative commons license.

This is nice to put next to your display, but a bit too tiny and fragile for my test lab - so I reused an old (originally mac68k cartridge) SCSI enclosure for mine:

Image by myself under creative commons license.

This machine is used to run regular tests for big endian  arm, the results are gathered here. Running it big-endian is just a way to trigger more bugs.

arm, the results are gathered here. Running it big-endian is just a way to trigger more bugs.

The last test run logged on the page is already done with an SMP kernel. No regressions were found so far, and the other bugs (sligtly more than 30 failures in the test run is way too much) will be addressed one by one.

Now happy multi-ARM-ing everyone, and I am looking forward to a great NetBSD 7.0 release!

The NetBSD Project is pleased to announce NetBSD 5.1.5, the fifth security/bugfix update of the NetBSD 5.1 release branch, and NetBSD 5.2.3, the third security/bugfix update of the NetBSD 5.2 release branch. They represent a selected subset of fixes deemed important for security or stability reasons, and if you are running a prior release of either branch, we strongly suggest that you update to one of these releases.

For more details, please see the NetBSD 5.1.5 release notes or NetBSD 5.2.3 release notes.

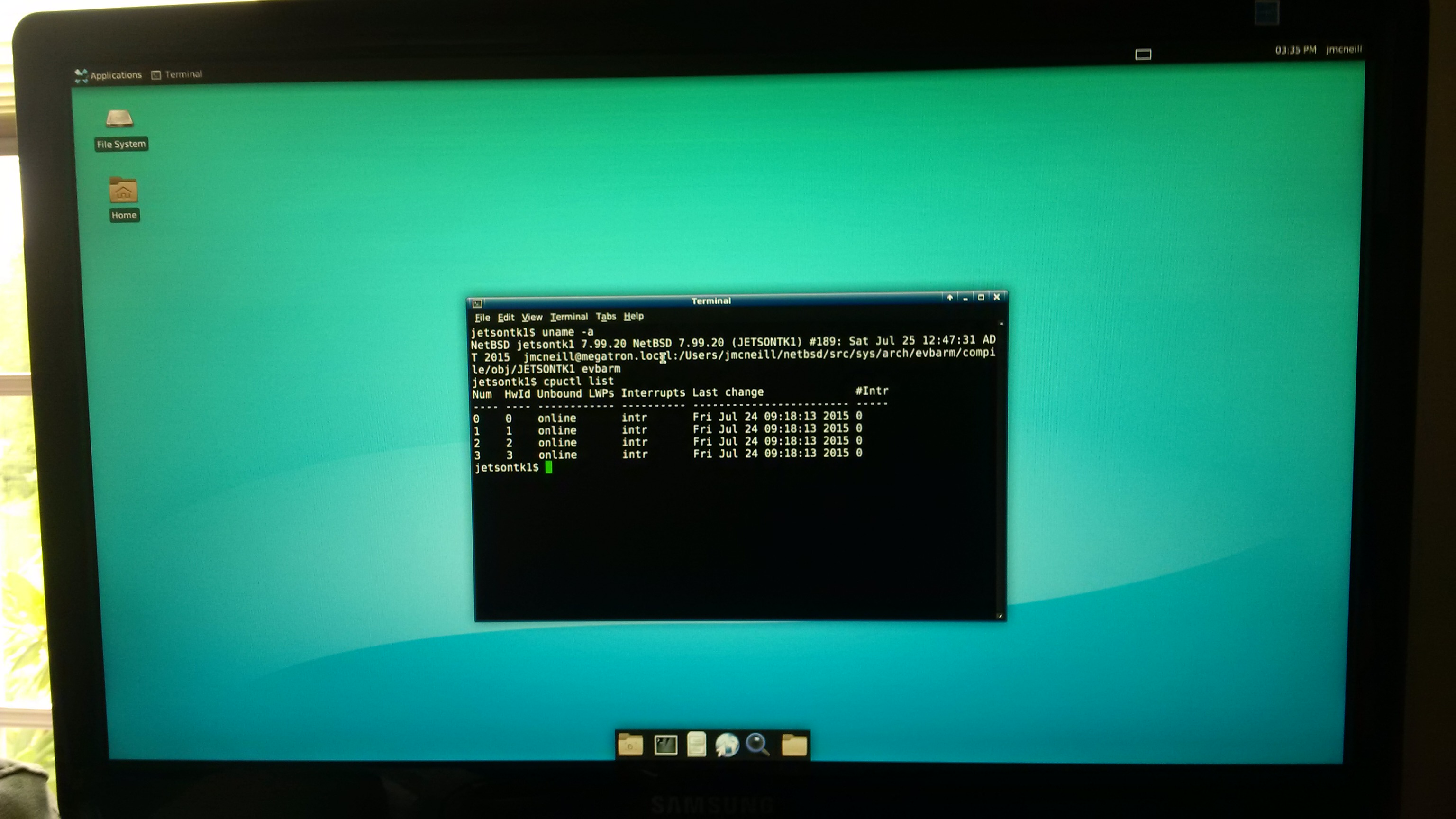

Complete source and binaries for NetBSD are available for download at many sites around the world. A list of download sites providing FTP, AnonCVS, SUP, and other services may be found at http://www.NetBSD.org/mirrors/.That is, make it load a kernel, identify / setup the CPU, attach a serial console. This is what it looks like:

U-Boot SPL 2013.10-rc3-g9329ab16a204 (Jun 26 2014 - 09:43:22)

SDRAM H5TQ2G83CFR initialization... done

U-Boot 2013.10-rc3-g9329ab16a204 (Jun 26 2014 - 09:43:22)

Board: ci20 (Ingenic XBurst JZ4780 SoC)

DRAM: 1 GiB

NAND: 8192 MiB

MMC: jz_mmc msc1: 0

In: eserial3

Out: eserial3

Err: eserial3

Net: dm9000

ci20# dhcp

ERROR: resetting DM9000 -> not responding

dm9000 i/o: 0xb6000000, id: 0x90000a46

DM9000: running in 8 bit mode

MAC: d0:31:10:ff:7e:89

operating at 100M full duplex mode

BOOTP broadcast 1

DHCP client bound to address 192.168.0.47

*** Warning: no boot file name; using 'C0A8002F.img'

Using dm9000 device

TFTP from server 192.168.0.44; our IP address is 192.168.0.47

Filename 'C0A8002F.img'.

Load address: 0x88000000

Loading: #################################################################

##############

284.2 KiB/s

done

Bytes transferred = 1146945 (118041 hex)

ci20# bootm

## Booting kernel from Legacy Image at 88000000 ...

Image Name: evbmips 7.99.1 (CI20)

Image Type: MIPS NetBSD Kernel Image (gzip compressed)

Data Size: 1146881 Bytes = 1.1 MiB

Load Address: 80020000

Entry Point: 80020000

Verifying Checksum ... OK

Uncompressing Kernel Image ... OK

subcommand not supported

ci20# g 80020000

## Starting applicatpmap_steal_memory: seg 0: 0x30c 0x30c 0xffff 0xffff

Loaded initial symtab at 0x802502d4, strtab at 0x80270cb4, # entries 8323

Copyright (c) 1996, 1997, 1998, 1999, 2000, 2001, 2002, 2003, 2004, 2005,

2006, 2007, 2008, 2009, 2010, 2011, 2012, 2013, 2014

The NetBSD Foundation, Inc. All rights reserved.

Copyright (c) 1982, 1986, 1989, 1991, 1993

The Regents of the University of California. All rights reserved.

NetBSD 7.99.1 (CI20) #113: Sat Nov 22 09:58:39 EST 2014

ml@blackbush:/home/build/obj_evbmips32/sys/arch/evbmips/compile/CI20

Ingenic XBurst

total memory = 1024 MB

avail memory = 1001 MB

kern.module.path=/stand/evbmips/7.99.1/modules

mainbus0 (root)

cpu0 at mainbus0: 1200.00MHz (hz cycles = 120000, delay divisor = 12)

cpu0: Ingenic XBurst (0x3ee1024f) Rev. 79 with unknown FPC type (0x330000) Rev. 0

cpu0: 32 TLB entries, 16MB max page size

cpu0: 32KB/32B 8-way set-associative L1 instruction cache

cpu0: 32KB/32B 8-way set-associative write-back L1 data cache

com0 at mainbus0: Ingenic UART, working fifo

com0: console

root device:

What works:

- CPU identification and setup

- serial console via UART0

- reset ( by provoking a watchdog timeout )

- basic timers - enough for delay(), since the CPUs don't have MIPS cycle counters

- dropping into ddb and poking around

What doesn't work (yet):

- interrupts

- everything else

Biggest obstacle - believe it or not, the serial port. The on-chip UARTs are mostly 16550 compatible. Mostly. The difference is one bit in the FIFO control register which, if not set, powers down the UART. So throwing data at the UART by hand worked but as soon as the com driver took over the line went dead. It took me a while to find that one.

FOSDEM 2014 will take place on 1–2 February, 2014, in Brussels, Belgium. Just like in the last years, there will be both a BSD booth and a developer's room (on Saturday).

The topics of the devroom include all BSD operating systems. Every talk is welcome, from internal hacker discussion to real-world examples and presentations about new and shiny features. The default duration for talks will be 45 minutes including discussion. Feel free to ask if you want to have a longer or a shorter slot.

If you already submitted a talk last time, please note that the procedure is slightly different.

To submit your proposal, visit

https://penta.fosdem.org/submission/FOSDEM14/

and follow the instructions to create an account and an “event”. Please select “BSD devroom” as the track. (Click on “Show all” in the top right corner to display the full form.)

Please include the following information in your submission:

- The title and subtitle of your talk (please be descriptive, as titles will be listed with ~500 from other projects)

- A short abstract of one paragraph

- A longer description if you wish to do so

- Links to related websites/blogs etc.

The deadline for submissions is December 20, 2013. The talk committee, consisting of Daniel Seuffert, Marius Nünnerich and Benny Siegert, will consider the proposals. If yours has been accepted, you will be informed by e-mail before the end of the year.

The NetBSD Project is pleased to announce NetBSD 7.0, the fifteenth major release of the NetBSD operating system.

This release brings stability improvements, hundreds of bug fixes, and many new features. Some highlights of the NetBSD 7.0 release are:

- DRM/KMS support brings accelerated graphics to x86 systems using modern Intel and Radeon devices.

- Multiprocessor ARM support.

- Support for many new ARM boards:

- Raspberry Pi 2

- ODROID-C1

- BeagleBoard, BeagleBone, BeagleBone Black

- MiraBox

- Allwinner A20, A31: Cubieboard2, Cubietruck, Banana Pi, etc.

- Freescale i.MX50, i.MX51: Kobo Touch, Netwalker

- Xilinx Zynq: Parallella, ZedBoard

- Major NPF improvements:

- BPF with just-in-time (JIT) compilation by default.

- Support for dynamic rules.

- Support for static (stateless) NAT.

- Support for IPv6-to-IPv6 Network Prefix Translation (NPTv6) as per RFC 6296.

- Support for CDB based tables (uses perfect hashing and guarantees lock-free O(1) lookups).

- Multiprocessor support in the USB subsystem.

- blacklistd(8), a new daemon that integrates with packet filters to dynamically protect other network daemons such as ssh, named, and ftpd from network break-in attempts.

- Numerous improvements in the handling of disk wedges (see dkctl(8) for information about wedges).

- GPT support in sysinst via the extended partitioning menu.

- Lua kernel scripting.

- epoc32, a new port which supports Psion EPOC PDAs.

- GCC 4.8.4, which brings support for C++11.

- Optional fully BSD-licensed C/C++ runtime env: compiler_rt, libc++, libcxxrt.

For a more complete list of changes in NetBSD 7.0, see the release notes.

Complete source and binaries for NetBSD 7.0 are available for download at many sites around the world. A list of download sites providing FTP, AnonCVS, and other services may be found at http://www.NetBSD.org/mirrors/.

If NetBSD makes your life better, please consider making a donation to The NetBSD Foundation in order to support the continued development of this fine operating system. As a non-profit organization with no commercial backing, The NetBSD Foundation depends on donations from its users. Your donation helps us fund large development projects, cover operating expenses, and keep the servers alive. For information about donating, visit http://www.NetBSD.org/donations/

In keeping with NetBSD's policy of supporting only the current (7.x) and next most recent (6.x) release majors, the release of NetBSD 7.0 marks the end of life for the 5.x branches. As in the past, a month of overlapping support is being provided in order to ease the migration to newer releases.

On November 9, the following branches will no longer be maintained:

- netbsd-5-2

- netbsd-5-1

- netbsd-5

Furthermore:

- There will be no more pullups to the branches (even for security issues)

- There will be no security advisories made for any of the 5.x releases

- The existing 5.x releases on ftp.NetBSD.org will be moved into /pub/NetBSD-archive/

We hope 7.0 serves you well!

Tutorials

I came to Paris on Wednesday in order to participate in tutorials:

- Thursday - DTrace for Developers: no more printfs - by George Neville-Neil

- Friday - How to untangle your threads from a giant lock in a multiprocessor system - by Taylor R. Campbell

The NetBSD Summit

On Friday there was a NetBSD summit that gathered around 15 developers. I have not participated in it myself because of the tutorial on SMP. There were three presentations:

- News from the version control front - by joerg,

- Scripting DDB with Forth - by uwe,

- NetBSD on Google Compute Engine - by bsiegert.

Talks

During Saturday and Sunday there were several NetBSD and pkgsrc related presentations:

- A Modern Replacement for BSD spell(1) - Abhinav Upadhyay

- Portable Hotplugging: NetBSD's uvm_hotplug(9) API development - Cherry G. Mathew

- Hardening pkgsrc - Pierre Pronchery

- Reproducible builds on NetBSD - Christos Zoulas

- The school of hard knocks - PT1 - Sevan Janiyan

- The LLDB Debugger on NetBSD - Kamil Rytarowski

- What's in store for NetBSD 8.0? - Alistair Crooks

During the closing ceremony there was a speech by Alistair Crooks on behalf of The NetBSD Foundation.

LLDB

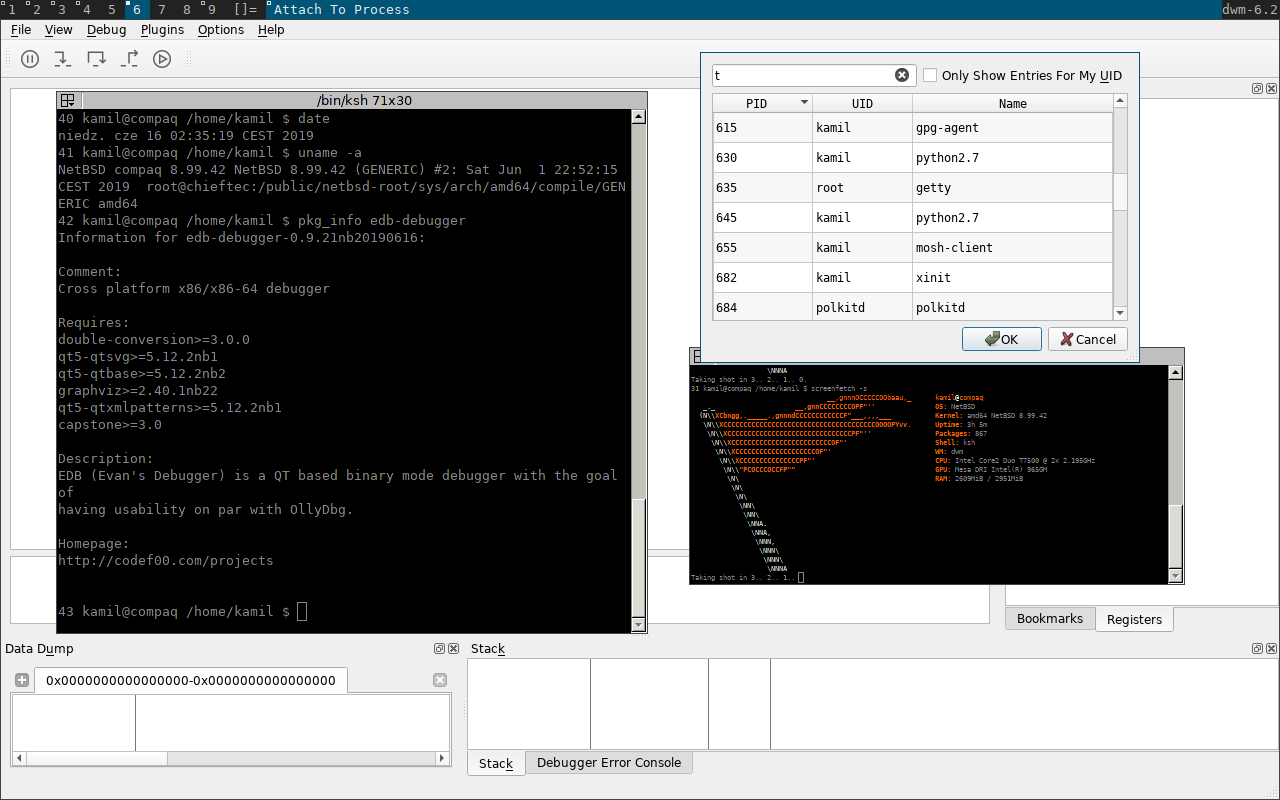

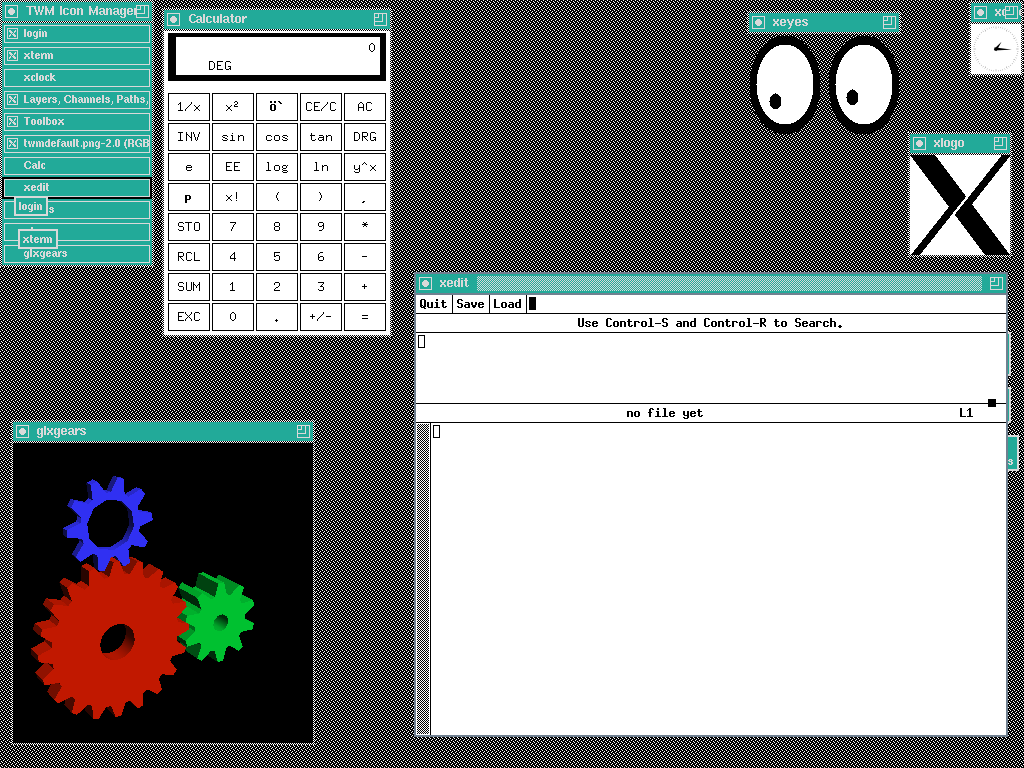

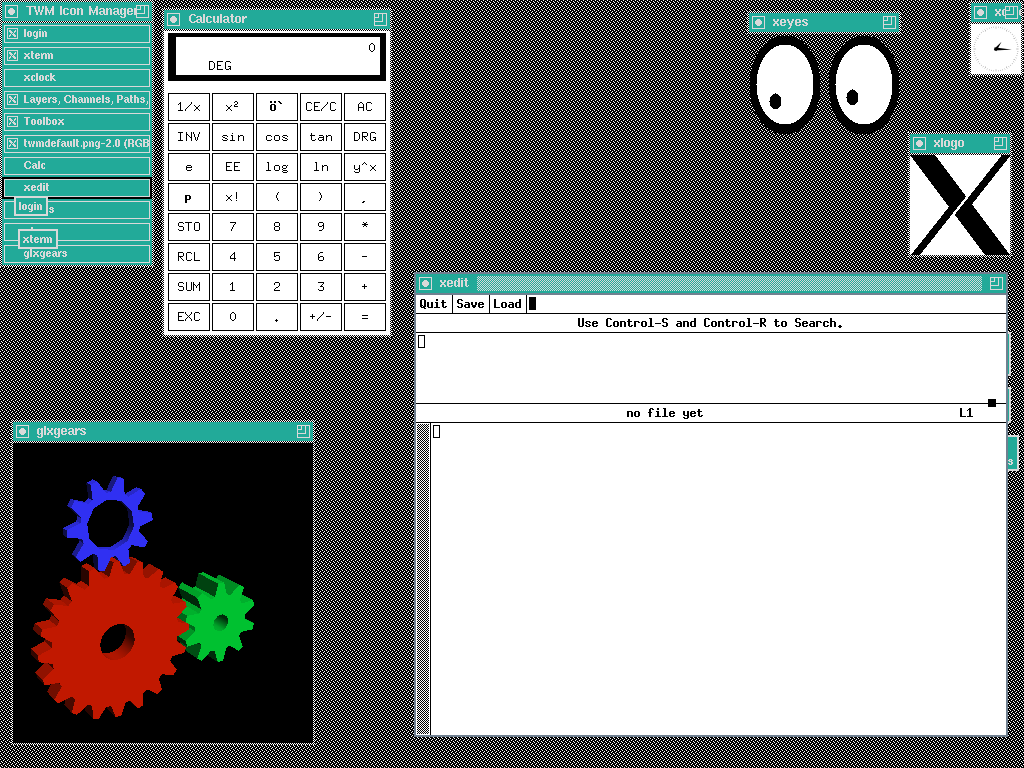

At the conference I presented my work on "The LLDB Debugger on NetBSD":

Plan for the next milestone

Resume porting tsan and msan to NetBSD with a plan to switch back to the LLDB porting.

This work was sponsored by The NetBSD Foundation.

The NetBSD Foundation is a non-profit organization and welcomes any donations to help us continue funding projects and services to the open-source community. Please consider visiting the following URL, and chip in what you can:

Tutorials

I came to Paris on Wednesday in order to participate in tutorials:

- Thursday - DTrace for Developers: no more printfs - by George Neville-Neil

- Friday - How to untangle your threads from a giant lock in a multiprocessor system - by Taylor R. Campbell

The NetBSD Summit

On Friday there was a NetBSD summit that gathered around 15 developers. I have not participated in it myself because of the tutorial on SMP. There were three presentations:

- News from the version control front - by joerg,

- Scripting DDB with Forth - by uwe,

- NetBSD on Google Compute Engine - by bsiegert.

Talks

During Saturday and Sunday there were several NetBSD and pkgsrc related presentations:

- A Modern Replacement for BSD spell(1) - Abhinav Upadhyay

- Portable Hotplugging: NetBSD's uvm_hotplug(9) API development - Cherry G. Mathew

- Hardening pkgsrc - Pierre Pronchery

- Reproducible builds on NetBSD - Christos Zoulas

- The school of hard knocks - PT1 - Sevan Janiyan

- The LLDB Debugger on NetBSD - Kamil Rytarowski

- What's in store for NetBSD 8.0? - Alistair Crooks

During the closing ceremony there was a speech by Alistair Crooks on behalf of The NetBSD Foundation.

LLDB

At the conference I presented my work on "The LLDB Debugger on NetBSD":

Plan for the next milestone

Resume porting tsan and msan to NetBSD with a plan to switch back to the LLDB porting.

This work was sponsored by The NetBSD Foundation.

The NetBSD Foundation is a non-profit organization and welcomes any donations to help us continue funding projects and services to the open-source community. Please consider visiting the following URL, and chip in what you can:

President: William J. Coldwell <billc>

Vice President: Jeremy C. Reed <reed>

Secretary: Christos Zoulas <christos>

Treasurer: Christos Zoulas <christos>

Assistant Secretary: Thomas Klausner <wiz>

Assistant Treasurer: Taylor R. Campbell <riastradh>

President: William J. Coldwell <billc>

Vice President: Jeremy C. Reed <reed>

Secretary: Christos Zoulas <christos>

Treasurer: Christos Zoulas <christos>

Assistant Secretary: Thomas Klausner <wiz>

Assistant Treasurer: Taylor R. Campbell <riastradh>

Let me tell you about my experience at

EuroBSDcon 2017

in Paris, France. We will

see what was presented during the NetBSD developer summit on Friday

and then we will give a look to all of the

NetBSD and

pkgsrc presentations given during

the conference session on Saturday and Sunday. Of course, a lot of

fun also happened on the "hall track", the several breaks

during the conference and the dinners we had together with other

*BSD developers and community! This is difficult to describe and

I will try to just share some part of that with photographs that

we have taken. I can just say that it was a really beautiful

experience, I had a great time with others and, after coming back

home... ...I miss all of that!  So, if you have never been in

any BSD conferences I strongly suggest you to go to the next ones,

so please stay tuned via

NetBSD Events.

Being there this is probably the only way to understand these feelings!

So, if you have never been in

any BSD conferences I strongly suggest you to go to the next ones,

so please stay tuned via

NetBSD Events.

Being there this is probably the only way to understand these feelings!

Thursday (21/09): NetBSD developers dinner

Arriving in Paris via a night train from Italy I

literally sleep-walked through Paris getting lost again and again.

After getting in touch with other developers we had a dinner together and went

sightseeing for a^Wseveral beers!

Friday (22/09): NetBSD developers summit

On Friday morning we met for the NetBSD developers summit kindly hosted by Arolla.

From left to right: alnsn, sborrill;

abhinav; uwe and leot;

christos, cherry, ast and

bsiegert; martin and khorben.

The devsummit was moderated by Jörg (joerg) and organized by

Jean-Yves (jym).

NetBSD on Google Compute Engine -- Benny Siegert (bsiegert)

After a self-presentation the devsummit presentations session started with the

talk presented by Benny (bsiegert) about NetBSD on Google

Compute Engine.

Benny first introduced Google Compute Engine (GCE) and then started describing how to run NetBSD on it.

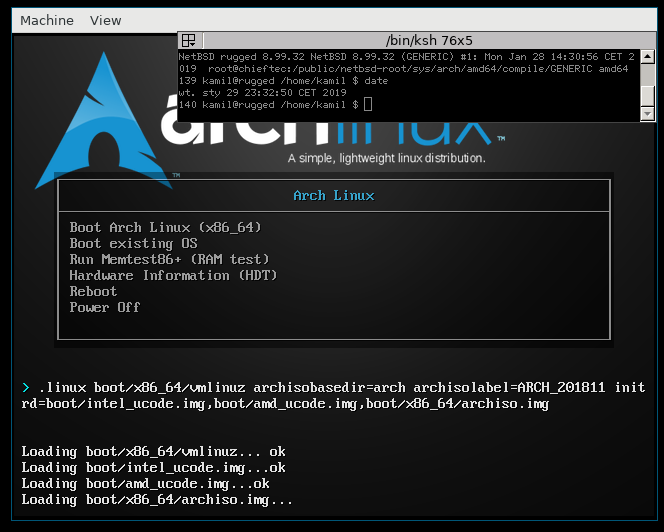

At the moment there are no official NetBSD images and so users need to create their own. However, netbsd-gce script completely automatize this process that:

- uses Anita to stage an installation in QEMU

- adjust several tweaks to ensure that networking and storage will work on GCE

- packs the image into a

.tar.gzfile

The .tar.gz image then just need to be uploaded to a Cloud Storage

bucket, create a GCE image from it and then launch VMs based on that image.

He also discussed about GCE instance metadata, several problems founds and how they were fixed (it's better to use NetBSD 8_BETA or -current!) and some future works.

For more information slides (PDF) of the talk are also available.

Scripting DDB with Forth -- Valery Ushakov (uwe)

Valery (uwe) presented a talk about Scripting DDB

with Forth. It was based on a long story and actually the

first discussion about it appeared on

tech-kern@

mailing list in his

Scripting DDB in Forth?

thread (ddb(4)

is the NetBSD in-kernel debugger).

He showed how one can associate forth commands/conditions with ddb breakpoints. He used "pid divisible by 3" as an example of condition for a breakpoint set in getpid(2) system call:

db{0}> forth

ok : field create , does> @ + ;

ok #300 field lwp>l_proc

ok #120 field proc>p_pid

ok : getpid curlwp lwp>l_proc @ proc>p_pid @ ;

ok : checkpid getpid dup ." > PID IS " . cr 3 mod 0= ;

ok bye

-- STACK: <empty>

db{0}> break sys_getpid_with_ppid

db{0}> command . = checkpid

db{0}> c

...and then on a shell:

# (:)

fatal breakpoint trap in supervisor mode

trap type 1 code 0 eip 0xc090df89 cs 0x8 eflags 0x246 cr2 0xad8ef2c0 ilevel 0 esp 0xc0157fbd

curlwp 0xc2b5c2c0 pid 798 lid 1 lowest kstack 0xdabb42c0

> PID IS 798

-- STACK:

0xffffffff -1

Breakpoint in pid 798.1 (ksh) at netbsd:sys_getpid_with_ppid: pushl %ebp

db{0}> c

# (:)

fatal breakpoint trap in supervisor mode

trap type 1 code 0 eip 0xc090df89 cs 0x8 eflags 0x246 cr2 0xad8ef2c0 ilevel 0 esp 0xc0157fbd

curlwp 0xc2b5c2c0 pid 823 lid 1 lowest kstack 0xdabb42c0

> PID IS 823

-- STACK:

0x00000000 0

Command returned 0

#

If you are more interested in this presentation I strongly suggest to also give

a look to uwe's

forth Mercurial repository.

News from the version control front -- Jörg Sonnenberger (joerg)

The third presentation of the devsummit was a presentation about the recent work done by

Jörg (joerg) in the VCS conversions.

Jörg started the presentation discussing about the infrastructure used for the CVS -> Fossil -> Git conversion and CVS -> Fossil -> Mercurial conversion.

It's worth also noticing that the Mercurial conversion is fully integrated and is regularly pushed to Bitbucket and src repository pushed some scalability limits to Bitbucket!

Mercurial performance were also compared to the Git ones in details for several operations.

A check list that compared the current status of the NetBSD VCS migration to the FreeBSD VCS wiki one was described and then Jörg discussed the pending work and answered several questions in the Q&A.

For more information please give a look to the

joerg's presentation slides (HTML).

If you would like to help for the VCS migration please also get in touch with

him!

Afternoon discussions and dinner

After the lunch we had several non-scheduled discussions, some time

for hacking, etc. We then had a nice dinner together (it was in a

restaurant with a very nice waiter who always shouted after

every order or after accidently dropping and crashing dishes!, yeah! That's

probably a bit weird but I liked that attitude!  and then did some

sightseeing and had a beer together.

and then did some

sightseeing and had a beer together.

From left to right: uwe, bad, ast,

leot, martin, abhinav,

sborrill, alnsn, spz.

From left to right: uwe, bad, ast,

christos, leot, martin,

sborrill, alnsn, spz.

Saturday (23/09): First day of conference session and Social Event

A Modern Replacement for BSD spell(1) -- Abhinav Upadhyay (abhinav)

Abhinav (abhinav) presented his work on the new

spell(1)

implementation he's working (that isn't just a spell replacement

but also a library that can be used by other programs!).

He described the current limitations of old spell(1) (to get an

idea please give a look to bin/48684),

described the project goals of the new spell(1), additions to

/usr/share/dict/words, digged a bit in the implementation and

discussed several algorithms used and then provided a performance comparison

with other popular free software spell checkers

(aspell,

hunspell and ispell).

He also showed an interactive demo of the new spell(1) in-action

integrated with a shell for auto-completion and spell check.

If you would like to try it please give a look to

nbspell Git repository

that contains the code and dicts for the new spell(1)!

Video recording (YouTube) of the talk and slides (PDF) are also available!

Portable Hotplugging: NetBSD's uvm_hotplug(9) API development -- Cherry G. Mathew (cherry)

Cherry (cherry) presented recent work done with Santhosh N.

Raju (fox) about

uvm_hotplug(9).

The talk covered most "behind the scenes" work: how TDD (test driven

development) was used, how uvm_hotplug(9) was designed

and implemented (with comparisons to the old implementation),

interesting edge cases during the development and how

atf(7)

was used to do performance testing.

It was very interesting to learn how Cherry and Santhosh worked on that and on the conclusion Cherry pointed out the importance of using existing Software Engineering techniques in Systems Programming.

Video recording (YouTube) and slides (PDF) of the talk are also available!

Hardening pkgsrc -- Pierre Pronchery (khorben)

Pierre (khorben) presented a talk about recent pkgsrc security

features added in the recent months (and most of them also active on the just

released pkgsrc-2017Q3!).

He first introduced how security management and releng is handled

in pkgsrc, how to use

pkg_admin(1)

fetch-pkg-vulnerabilities and audit

commands, etc.

Then package signatures (generation, installation) and recent hardening features in pkgsrc were discussed in details, first introducing them and then how pkgsrc handles them:

- SSP: Stack Smashing Protection (enabled via

PKGSRC_USE_SSPinmk.conf) - Fortify (enabled via

PKGSRC_USE_FORTIFYinmk.conf) - Stack check (enabled via

PKGSRC_USE_STACK_CHECKinmk.conf) - Position-Independent Executables (PIE) (enabled via

PKGSRC_MKPIEinmk.conf) - RELRO and BIND_NOW (enabled via

PKGSRC_USE_RELROinmk.conf)

Challenges for each hardening features and future works were discussed.

For more information video recording (YouTube) and slides (PDF) of the talk are available. A good introduction and reference for all pkgsrc hardening features is the Hardening packages wiki page.

Reproducible builds on NetBSD -- Christos Zoulas (christos)

Christos (christos) presented the work about reproducible builds on

NetBSD.

In his talk he first provided a rationale about reproducible builds (to learn

more please give a look to

reproducible-builds.org!), he

then discussed about the NetBSD (cross) build process, the

current status

and build variations that are done in the tests.reproducible-builds.org

build machines.

Then he provided and described several sources of difference that were present in non-reproducible builds, like file-system timestamps, parallel builds headaches due directory/build order, path normalization, etc. For each of them he also discussed in details how these problems were solved in NetBSD.

In the conclusion the status and possible TODOs were also discussed (please note

that both -current and -8 are all built with

reproducible flags (-P option of build.sh)!)

Video recording (YouTube) of Christos' talk is available. Apart the resources discussed above a nice introduction to reproducible builds in NetBSD is also the NetBSD fully reproducible builds blog post written by Christos last February!

Social event

The social event on Saturday evening took place on a boat that cruised on the Seine river.

It was a very nice and different way to sightsee Paris, eat and enjoy some drinks and socialize and discuss with other developers and community.

Sunday (24/09): Second day of conference session

The school of hard knocks - PT1 -- Sevan Janiyan (sevan)

Sevan (sevan) presented a talk about several notes and lessons learnt

whilst running tutorials to introduce NetBSD at several events

(OSHUG #46 and

OSHUG #57 and

#58) and experiences from past events

(Chiphack 2013).

He described problems a user may experience and how NetBSD was introduced, in particular trying to avoid the steep learning curve involved when experimenting with operating systems as a first step, exploring documentation/source code, cross-building, scripting in high-level programming languages (Lua) and directly prototyping and getting pragmatic via rump.

Video recording (YouTube) of Sevan's talk and slides (HTML) are available.

The LLDB Debugger on NetBSD -- Kamil Rytarowski (kamil)

Kamil (kamil) presented a talk about the recent LLDB debugger and a

lot of other related debuggers (but also

non-strictly-related-to-debugging!) works he's doing in the last

months.

He first introduced debugging concepts in general, provided several examples and then he started discussing LLDB porting to NetBSD.

He then discussed about

ptrace(2)

and other introspection interfaces, the several improvements done and tests

added for ptrace(2) in

atf(7).

He also discussed about tracking LLDB's trunk (if you are more curious please

give a look to wip/llvm-git,

wip/clang-git, wip/lldb-git packages in pkgsrc-wip!) and about LLVM sanitizers

and their current status in NetBSD.

In the conclusion he also discussed various TODOs in these areas.

Video recording (YouTube)

and slides (HTML)

of Kamil's talk are available.

Kamil also regularly write status update blog posts on

blog.NetBSD.org and

tech-toolchain@ mailing

list, so please stay tuned!

What's in store for NetBSD 8.0? -- Alistair Crooks (agc)

Alistair (agc) presented a talk about what we will see in NetBSD

8.0.

He discussed about new hardware supported (really "new", not new "old" hardware!

Of course also support for VAXstation 4000 TURBOchannel USB and GPIO is actually

new hardware as well! :)), LLVM/Clang, virtualization, PGP signing, updated

utilities in NetBSD, new networking features (e.g. bouyer's

sockcan implementation), u-boot,

dtrace(1),

improvements and new ports testing, reproducible builds,

FDT (Flattened Device Tree) and a lot of other news!

The entire presentation was done using the Socratic method (Q&A) and it was very interactive and nice!

Video recording (YouTube) and slides (PDF) of Alistair's talk are available.

Sunday dinner

After the conference we did some sightseeing in Paris, had a dinner together and then enjoyed some beers!

On the left side: abhinav, ast, seb, christos

On the right side: leot, Riastradh, uwe, sevan, agc, sborrill

On the left side: martin, ast, seb, christos

On the right side: leot, Riastradh, uwe, sevan, agc, sborrill

Conclusion

It was a very nice weekend and conference. It is worth to mention that EuroBSDcon 2017 was the biggest BSD conference (more than 300 people attended it!).

I would like to thank the entire EuroBSDcon organising committee (Baptiste Daroussin, Antoine Jacoutot, Jean-Sébastien Pédron and Jean-Yves Migeon), EuroBSDcon programme commitee (Antoine Jacoutot, Lars Engels, Ollivier Robert, Sevan Janiyan, Jörg Sonnenberger, Jasper Lievisse Adriaanse and Janne Johansson) and EuroBSDcon Foundation for organizing such a wonderful conference!

I also would like to thank the speakers for presenting very interesting talks, all developers and community that attended the NetBSD devsummit and conference, in particular Jean-Yves and Jörg, for organizing and moderating the devsummit and Arolla that kindly hosted us for the NetBSD devsummit!

A special thanks also to Abhinav (abhinav) and Martin

(martin) for photographs and locals Jean-Yves (jym)

and Stoned (seb) for helping us in not get lost in Paris'

rues!

Thank you!

Let me tell you about my experience at

EuroBSDcon 2017

in Paris, France. We will

see what was presented during the NetBSD developer summit on Friday

and then we will give a look to all of the

NetBSD and

pkgsrc presentations given during

the conference session on Saturday and Sunday. Of course, a lot of

fun also happened on the "hall track", the several breaks

during the conference and the dinners we had together with other

*BSD developers and community! This is difficult to describe and

I will try to just share some part of that with photographs that

we have taken. I can just say that it was a really beautiful

experience, I had a great time with others and, after coming back

home... ...I miss all of that!  So, if you have never been in

any BSD conferences I strongly suggest you to go to the next ones,

so please stay tuned via

NetBSD Events.

Being there this is probably the only way to understand these feelings!

So, if you have never been in

any BSD conferences I strongly suggest you to go to the next ones,

so please stay tuned via

NetBSD Events.

Being there this is probably the only way to understand these feelings!

Thursday (21/09): NetBSD developers dinner

Arriving in Paris via a night train from Italy I

literally sleep-walked through Paris getting lost again and again.

After getting in touch with other developers we had a dinner together and went

sightseeing for a^Wseveral beers!

Friday (22/09): NetBSD developers summit

On Friday morning we met for the NetBSD developers summit kindly hosted by Arolla.

From left to right: alnsn, sborrill;

abhinav; uwe and leot;

christos, cherry, ast and

bsiegert; martin and khorben.

The devsummit was moderated by Jörg (joerg) and organized by

Jean-Yves (jym).

NetBSD on Google Compute Engine -- Benny Siegert (bsiegert)

After a self-presentation the devsummit presentations session started with the

talk presented by Benny (bsiegert) about NetBSD on Google

Compute Engine.

Benny first introduced Google Compute Engine (GCE) and then started describing how to run NetBSD on it.

At the moment there are no official NetBSD images and so users need to create their own. However, netbsd-gce script completely automatize this process that:

- uses Anita to stage an installation in QEMU

- adjust several tweaks to ensure that networking and storage will work on GCE

- packs the image into a

.tar.gzfile

The .tar.gz image then just need to be uploaded to a Cloud Storage

bucket, create a GCE image from it and then launch VMs based on that image.

He also discussed about GCE instance metadata, several problems founds and how they were fixed (it's better to use NetBSD 8_BETA or -current!) and some future works.

For more information slides (PDF) of the talk are also available.

Scripting DDB with Forth -- Valery Ushakov (uwe)

Valery (uwe) presented a talk about Scripting DDB

with Forth. It was based on a long story and actually the

first discussion about it appeared on

tech-kern@

mailing list in his

Scripting DDB in Forth?

thread (ddb(4)

is the NetBSD in-kernel debugger).

He showed how one can associate forth commands/conditions with ddb breakpoints. He used "pid divisible by 3" as an example of condition for a breakpoint set in getpid(2) system call:

db{0}> forth

ok : field create , does> @ + ;

ok #300 field lwp>l_proc

ok #120 field proc>p_pid

ok : getpid curlwp lwp>l_proc @ proc>p_pid @ ;

ok : checkpid getpid dup ." > PID IS " . cr 3 mod 0= ;

ok bye

-- STACK: <empty>

db{0}> break sys_getpid_with_ppid

db{0}> command . = checkpid

db{0}> c

...and then on a shell:

# (:)

fatal breakpoint trap in supervisor mode

trap type 1 code 0 eip 0xc090df89 cs 0x8 eflags 0x246 cr2 0xad8ef2c0 ilevel 0 esp 0xc0157fbd

curlwp 0xc2b5c2c0 pid 798 lid 1 lowest kstack 0xdabb42c0

> PID IS 798

-- STACK:

0xffffffff -1

Breakpoint in pid 798.1 (ksh) at netbsd:sys_getpid_with_ppid: pushl %ebp

db{0}> c

# (:)

fatal breakpoint trap in supervisor mode

trap type 1 code 0 eip 0xc090df89 cs 0x8 eflags 0x246 cr2 0xad8ef2c0 ilevel 0 esp 0xc0157fbd

curlwp 0xc2b5c2c0 pid 823 lid 1 lowest kstack 0xdabb42c0

> PID IS 823

-- STACK:

0x00000000 0

Command returned 0

#

If you are more interested in this presentation I strongly suggest to also give

a look to uwe's

forth Mercurial repository.

News from the version control front -- Jörg Sonnenberger (joerg)

The third presentation of the devsummit was a presentation about the recent work done by

Jörg (joerg) in the VCS conversions.

Jörg started the presentation discussing about the infrastructure used for the CVS -> Fossil -> Git conversion and CVS -> Fossil -> Mercurial conversion.

It's worth also noticing that the Mercurial conversion is fully integrated and is regularly pushed to Bitbucket and src repository pushed some scalability limits to Bitbucket!

Mercurial performance were also compared to the Git ones in details for several operations.

A check list that compared the current status of the NetBSD VCS migration to the FreeBSD VCS wiki one was described and then Jörg discussed the pending work and answered several questions in the Q&A.

For more information please give a look to the

joerg's presentation slides (HTML).

If you would like to help for the VCS migration please also get in touch with

him!

Afternoon discussions and dinner

After the lunch we had several non-scheduled discussions, some time

for hacking, etc. We then had a nice dinner together (it was in a

restaurant with a very nice waiter who always shouted after

every order or after accidently dropping and crashing dishes!, yeah! That's

probably a bit weird but I liked that attitude!  and then did some

sightseeing and had a beer together.

and then did some

sightseeing and had a beer together.

From left to right: uwe, bad, ast,

leot, martin, abhinav,

sborrill, alnsn, spz.

From left to right: uwe, bad, ast,

christos, leot, martin,

sborrill, alnsn, spz.

Saturday (23/09): First day of conference session and Social Event

A Modern Replacement for BSD spell(1) -- Abhinav Upadhyay (abhinav)

Abhinav (abhinav) presented his work on the new

spell(1)

implementation he's working (that isn't just a spell replacement

but also a library that can be used by other programs!).

He described the current limitations of old spell(1) (to get an

idea please give a look to bin/48684),

described the project goals of the new spell(1), additions to

/usr/share/dict/words, digged a bit in the implementation and

discussed several algorithms used and then provided a performance comparison

with other popular free software spell checkers

(aspell,

hunspell and ispell).

He also showed an interactive demo of the new spell(1) in-action

integrated with a shell for auto-completion and spell check.

If you would like to try it please give a look to

nbspell Git repository

that contains the code and dicts for the new spell(1)!

Video recording (YouTube) of the talk and slides (PDF) are also available!

Portable Hotplugging: NetBSD's uvm_hotplug(9) API development -- Cherry G. Mathew (cherry)

Cherry (cherry) presented recent work done with Santhosh N.

Raju (fox) about

uvm_hotplug(9).

The talk covered most "behind the scenes" work: how TDD (test driven

development) was used, how uvm_hotplug(9) was designed

and implemented (with comparisons to the old implementation),

interesting edge cases during the development and how

atf(7)

was used to do performance testing.

It was very interesting to learn how Cherry and Santhosh worked on that and on the conclusion Cherry pointed out the importance of using existing Software Engineering techniques in Systems Programming.

Video recording (YouTube) and slides (PDF) of the talk are also available!

Hardening pkgsrc -- Pierre Pronchery (khorben)

Pierre (khorben) presented a talk about recent pkgsrc security

features added in the recent months (and most of them also active on the just

released pkgsrc-2017Q3!).

He first introduced how security management and releng is handled

in pkgsrc, how to use

pkg_admin(1)

fetch-pkg-vulnerabilities and audit

commands, etc.

Then package signatures (generation, installation) and recent hardening features in pkgsrc were discussed in details, first introducing them and then how pkgsrc handles them:

- SSP: Stack Smashing Protection (enabled via

PKGSRC_USE_SSPinmk.conf) - Fortify (enabled via

PKGSRC_USE_FORTIFYinmk.conf) - Stack check (enabled via

PKGSRC_USE_STACK_CHECKinmk.conf) - Position-Independent Executables (PIE) (enabled via

PKGSRC_MKPIEinmk.conf) - RELRO and BIND_NOW (enabled via

PKGSRC_USE_RELROinmk.conf)

Challenges for each hardening features and future works were discussed.